Open-source technology developed in the civilian sector can also be used in military applications, or simply misused. Navigating this dual-use potential is becoming more important across engineering fields, because innovation goes both ways. The "openness" of open-source technology drives innovation and gives everyone access. Unfortunately, it also makes that technology just as accessible to others, including militaries and criminals.

What happens when a rogue state, a nonstate militia, or a school shooter shows the same creativity and innovation with open-source technology that engineers do? That is the question we discuss here: how can we uphold our principles of open research and innovation to drive progress while mitigating the risks that come with accessible technology?

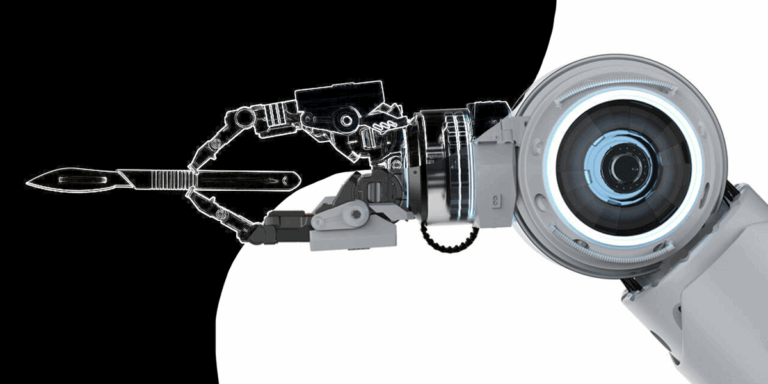

Rather than treating this as an open-ended risk, let's look at the specific challenges that open-source technology and its dual-use potential pose for robotics. Understanding these challenges can help engineers learn what to look for in their own disciplines.

The Power and Peril of Openness

Open-access publications, software, and educational content are fundamental to advancing robotics. They have democratized access to knowledge, enabled reproducibility, and fostered a vibrant, collaborative international community of scientists. Platforms like arXiv and GitHub, and open-source initiatives like the Robot Operating System (ROS) and the Open Dynamic Robot Initiative, have been pivotal in accelerating robotics research and innovation. There is no doubt that they should remain openly accessible. Losing access to these resources would be devastating to the robotics field.

However, robotics carries inherent dual-use risks, since most robotics technology can be repurposed for military use or harmful purposes. One recent example, custom-made drones in current conflicts, is particularly instructive. The resourcefulness Ukrainian soldiers have shown in repurposing, and sometimes augmenting, civilian drone technology received worldwide and often admiring news coverage. Their creativity was made possible by affordable commercial drones, spare parts, and 3D printers, together with the availability of open-source software and hardware. These let people with little technical background and little money easily build, control, and repurpose robots for military applications. One can certainly argue that this has had an empowering effect on Ukrainians defending their country. However, the same conditions also create opportunities for a wide range of potential bad actors.

Openly available knowledge, designs, and software can be misused to enhance existing weapons systems with capabilities like vision-based navigation, autonomous targeting, or swarming. In addition, unless proper security measures are taken, the public nature of open-source code makes it vulnerable to cyberattacks, potentially allowing malicious actors to take control of robotic systems and make them malfunction or serve malevolent purposes. Many ROS users already acknowledge that they do not invest enough in cybersecurity for their applications.

Guidance Is Necessary

Dual-use risks stemming from openness in research and innovation are a concern for many engineering fields. Did you know that engineering was originally a military-only activity? The word "engineer" was coined in the Middle Ages to describe "a designer and constructor of fortifications and weapons." Some engineering specializations, especially those involved in developing weapons of mass destruction (chemical, biological, radiological, and nuclear), have developed clear guidance, and in some cases regulations, for how research and innovation can be conducted and disseminated. They also have community-driven processes meant to mitigate the dual-use risks of spreading knowledge. For instance, bioRxiv and medRxiv, the preprint servers for biology and the health sciences, screen submissions for material that poses a biosecurity or health risk before publishing them.

The field of robotics, by comparison, offers no specific regulation and little guidance on how roboticists should evaluate and manage the risks associated with openness. Dual-use risk is not taught at most universities, even though students will likely face it in their careers, for example when assessing whether their work is subject to export control regulations on dual-use items.

As a result, roboticists may not feel incentivized or equipped to evaluate and mitigate the dual-use risks of their work. This is a major problem. The likelihood of harm from the misuse of open robotics research and innovation is probably higher than for nuclear and biological research, both of which require significantly more resources. Building "do-it-yourself" robotic weapon systems from open-source designs and software and off-the-shelf commercial components is relatively easy and accessible. With this in mind, we think it is high time for the robotics community to develop its own sector-specific guidance on how researchers and companies can best navigate the dual-use risks that come with openly disseminating their work.

A Roadmap for Responsible Robotics

Striking a balance between security and openness is a complex challenge, but one that the robotics community must embrace. We cannot afford to stifle innovation, nor can we ignore the potential for harm. A proactive, multipronged approach is needed to navigate this dual-use dilemma. Drawing lessons from other fields of engineering, we propose a roadmap focusing on four key areas: education, incentives, moderation, and red lines.

Education

Integrating responsible research and innovation into robotics education at all levels is paramount. This includes not only dedicated courses but also the systematic inclusion of dual-use and cybersecurity considerations in core robotics curricula. We must foster a culture of responsible innovation so that roboticists are empowered to make informed decisions and proactively address potential risks.

Educational initiatives could include:

Incentives

Everyone should be encouraged to assess the potential negative consequences of making their work fully or partially open. Funding agencies can signal the importance of this by making risk assessments a condition of project funding. Professional organizations, like the IEEE Robotics and Automation Society (RAS), can adopt and promote best practices, providing tools and frameworks that help researchers identify, assess, and mitigate risks. Such tools could include self-assessment checklists for individual researchers and guidance on how faculties and labs can set up ethics review boards. Academic journals and conferences can make risk assessment an integral part of peer review and publication, especially for high-risk applications.

In addition, incentives like awards and recognition programs can highlight exemplary contributions to risk assessment and mitigation, fostering a culture of responsibility within the community. Risk assessment can also be encouraged and rewarded in more informal ways. People in leadership positions, such as PhD supervisors and lab heads, can create ad hoc opportunities for students and researchers to discuss possible risks. They can hold seminars on the topic and introduce their teams to external experts and stakeholders, such as social scientists and specialists from NGOs.

Moderation

The robotics community can implement self-regulation mechanisms to moderate the diffusion of high-risk material. This could involve:

- Screening work before publication to prevent the dissemination of content that poses serious risks.

- Implementing graduated access controls ("gating") for certain source code or data on open-source repositories, potentially requiring users to identify themselves and state their intended use.

- Establishing clear guidelines and community oversight to ensure transparency and prevent misuse of these moderation mechanisms. For example, organizations like RAS could define risk-level categories for robotics research and applications, and set up a monitoring committee to track and document real cases of misuse of robotics research. This would help the community understand and visualize the scale of the risks and develop better mitigation strategies.
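To make graduated access more concrete, here is a minimal Python sketch of how a repository might decide whether to release a gated resource. The three tiers (`PUBLIC`, `REGISTERED`, `VETTED`), the `AccessRequest` fields, and the `Gatekeeper.grant_access` method are hypothetical illustrations, not an existing tool or any platform's actual API. Each decision is also written to an audit log, in the spirit of the community oversight described above.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional, Tuple


class AccessTier(IntEnum):
    """Hypothetical gating tiers, ordered from least to most restricted."""
    PUBLIC = 0      # anyone may download
    REGISTERED = 1  # requester must identify themselves
    VETTED = 2      # identity plus a stated intended use approved by a reviewer


@dataclass
class AccessRequest:
    requester_id: Optional[str]          # None means the requester is anonymous
    intended_use: Optional[str] = None   # free-text statement of intended use
    use_approved: bool = False           # set by a human reviewer, not by this code


@dataclass
class Gatekeeper:
    """Applies the tier policy and keeps an audit trail for oversight."""
    audit_log: List[Tuple[str, AccessTier, bool]] = field(default_factory=list)

    def grant_access(self, tier: AccessTier, request: AccessRequest) -> bool:
        if tier == AccessTier.PUBLIC:
            allowed = True
        elif not request.requester_id:
            allowed = False  # every gated tier requires identification
        elif tier == AccessTier.REGISTERED:
            allowed = True
        else:
            # VETTED: the intended use must be stated and approved
            allowed = bool(request.intended_use) and request.use_approved
        # Record every decision so an oversight body can review how gating is used
        self.audit_log.append((request.requester_id or "anonymous", tier, allowed))
        return allowed


# Example usage
gate = Gatekeeper()
anon = AccessRequest(requester_id=None)
lab = AccessRequest("lab-42", "warehouse navigation research", use_approved=True)
print(gate.grant_access(AccessTier.PUBLIC, anon))      # True
print(gate.grant_access(AccessTier.REGISTERED, anon))  # False
print(gate.grant_access(AccessTier.VETTED, lab))       # True
print(len(gate.audit_log))                             # 3
```

Approval of an intended use is deliberately left as a flag set by a human reviewer, because judging whether a use is acceptable is a community decision rather than something code can verify. The sketch also does not attempt identity verification, which a real gating system would need.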

Red Lines

The robotics community should also seek to define and enforce red lines for the development and deployment of robotics technologies. Some efforts in this direction have already been made, notably in the context of the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. Companies including Boston Dynamics, Unitree, Agility Robotics, Clearpath Robotics, ANYbotics, and Open Robotics wrote an open letter calling for regulations on the weaponization of general-purpose robots. Unfortunately, their efforts were very narrow in scope, and there is much value in further mapping the end uses of robotics that should be deemed off-limits or that demand extra caution.

It will certainly be difficult for the community to agree on standard red lines, because what is considered ethically acceptable or problematic is highly subjective. To support the process, individuals and companies can reflect on what they consider unacceptable uses of their work. This could result in policies and terms of use that users of open research and open-source designs and software must formally agree to (such as specific-use open-source licenses). These would provide a basis for revoking access, denying software updates, and potentially suing or blacklisting people who misuse the technology. Some companies, including Boston Dynamics, have already implemented such measures to some extent. Any person or company conducting open research could follow this example.

Openness is key to innovation and to the democratization of many engineering disciplines, including robotics, but it also amplifies the potential for misuse. The engineering community has a responsibility to address the dual-use dilemma proactively. By embracing responsible practices, from education and risk assessment to moderation and red lines, we can foster an ecosystem where openness and security coexist. The challenges are significant, but the stakes are too high to ignore. It is crucial to ensure that research and innovation benefit society globally and do not become a driver of instability in the world. This goal, we believe, aligns with the mission of the IEEE, which is to "advance technology for the benefit of humanity." The engineering community, and roboticists in particular, must be proactive on these issues to avoid a backlash from society and to preempt potentially counterproductive measures or international regulations that could harm open science.