Finding a better way

Each time an Amsterdam resident applies for benefits, a caseworker reviews the application for irregularities. If an application looks suspicious, it can be sent to the city’s investigations department, which could lead to a rejection, a request to correct paperwork errors, or a recommendation that the applicant receive less money. Investigations can also happen later, once benefits have been disbursed; the outcome may force recipients to pay back funds, and even push some into debt.

Officials have broad authority over both applicants and existing welfare recipients. They can request bank records, summon beneficiaries to city hall, and in some cases make unannounced visits to a person’s home. While investigations are carried out, or paperwork errors fixed, much-needed payments may be delayed. And often, in more than half of the investigations of applications according to figures provided by Bodaar, the city finds no evidence of wrongdoing. In those cases, this can mean that the city has “wrongly harassed people,” Bodaar says.

The Smart Check system was designed to avoid these scenarios by eventually replacing the initial caseworker who flags which cases to send to the investigations department. The algorithm would screen the applications to identify those most likely to involve major errors, based on certain personal characteristics, and redirect those cases for further scrutiny by the enforcement team.

If all went well, the city wrote in its internal documentation, the system would improve on the performance of its human caseworkers, flagging fewer welfare applicants for investigation while identifying a greater proportion of cases with errors. In one document, the city projected that the model would prevent up to 125 individual Amsterdammers from facing debt collection and save €2.4 million annually.
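The two goals named here, fewer flags and a larger share of flags that turn up errors, can be made concrete with a small calculation. The sketch below is illustrative only: the function name, the metric names, and the ten toy applications are assumptions made for this example, not figures from the city, and "hit rate" is one possible reading of "proportion of cases with errors."

```python
def screening_stats(flagged, has_error):
    """Summarize one screener's decisions.

    flagged and has_error are parallel lists of booleans,
    one entry per application (toy data, not real cases).
    """
    n_flagged = sum(flagged)
    # A "hit" is a flagged application that really contained an error.
    hits = sum(f and e for f, e in zip(flagged, has_error))
    return {
        "flag_rate": n_flagged / len(flagged),
        "hit_rate": hits / n_flagged if n_flagged else 0.0,
    }


# Ten made-up applications; two of them contain a real error.
has_error = [True, False, False, True, False,
             False, False, False, False, False]

# Hypothetical decisions by a caseworker and by a model.
caseworker_flags = [True, True, True, False, True,
                    False, False, False, False, False]
model_flags = [True, False, False, True, False,
               True, False, False, False, False]

caseworker = screening_stats(caseworker_flags, has_error)
model = screening_stats(model_flags, has_error)
print("caseworker:", caseworker)
print("model:     ", model)
```

In this toy case the model flags fewer applications than the caseworker and a larger share of its flags are genuine errors, which is the outcome the city's documentation hoped for.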

Smart Check was an exciting prospect for city officials like de Koning, who would manage the project when it was deployed. He was optimistic, since the city was taking a scientific approach, he says; it would “see if it was going to work” instead of taking the attitude that “this must work, and no matter what, we will continue this.”

It was the kind of bold idea that attracted optimistic techies like Loek Berkers, a data scientist who worked on Smart Check in only his second job out of college. Speaking in a restaurant tucked behind Amsterdam’s city hall, Berkers remembers being impressed at his first contact with the system: “Especially for a project within the municipality,” he says, it “was very much a kind of revolutionary project that was trying something new.”

Smart Check made use of an algorithm called an “explainable boosting machine,” which allows people to more easily understand how AI models produce their predictions. Most other machine-learning models are often regarded as “black boxes” running abstract mathematical processes that are hard to understand for both the employees tasked with using them and the people affected by the results.

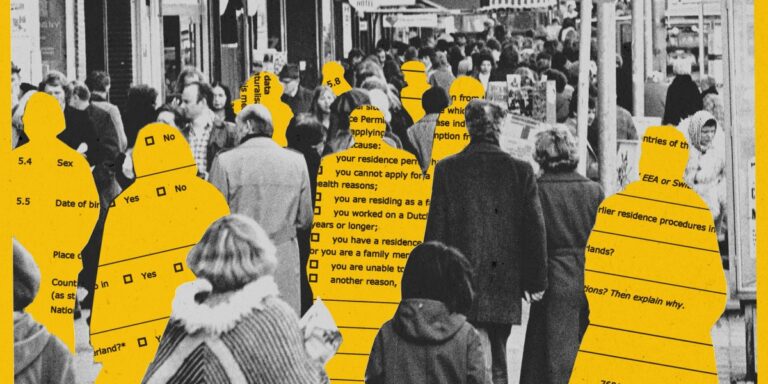

The Smart Check model would consider 15 characteristics (including whether applicants had previously applied for or received benefits, the sum of their assets, and the number of addresses they had on file) to assign a risk score to each person. It purposefully avoided demographic factors, such as gender, nationality, or age, that were thought to lead to bias. It also tried to avoid “proxy” factors, like postal codes, that may not look sensitive on the surface but can become so if, for example, a postal code is statistically associated with a particular ethnic group.
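What makes an explainable boosting machine readable is that its score is a sum of separate per-characteristic contributions, so each one can be inspected on its own. The sketch below shows that additive idea under loud assumptions: the three feature names echo characteristics mentioned above, but the bin edges, contribution values, and intercept are invented for illustration and are not taken from the city's model. It also ignores the pairwise interaction terms such models can include.

```python
import math
from bisect import bisect_right

# Hypothetical lookup tables: feature -> (bin upper edges, contribution per bin).
# Every number here is made up for illustration.
SHAPE_FUNCTIONS = {
    "previously_applied": ([0.5], [-0.20, 0.30]),            # 0 = no, 1 = yes
    "total_assets_eur": ([1000, 5000], [0.25, 0.00, -0.15]),
    "addresses_on_file": ([1.5, 3.5], [-0.10, 0.05, 0.40]),
}
INTERCEPT = -1.0  # hypothetical baseline, in log-odds


def contributions(applicant):
    """Return each feature's additive contribution to the log-odds."""
    result = {}
    for name, (edges, values) in SHAPE_FUNCTIONS.items():
        # bisect_right finds which bin the applicant's value falls into.
        result[name] = values[bisect_right(edges, applicant[name])]
    return result


def risk_score(applicant):
    """Sum the contributions and squash the total into a 0-1 score."""
    logit = INTERCEPT + sum(contributions(applicant).values())
    return 1 / (1 + math.exp(-logit))


# A hypothetical applicant, not a real case.
applicant = {
    "previously_applied": 1,
    "total_assets_eur": 800,
    "addresses_on_file": 4,
}
for feature, value in contributions(applicant).items():
    print(f"{feature}: {value:+.2f}")
print(f"risk score: {risk_score(applicant):.3f}")
```

Because the total is just a sum, a caseworker could in principle read off which characteristic pushed a given applicant's score up or down, which is harder to do with a black-box model.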

In an unusual step, the city has disclosed this information and shared multiple versions of the Smart Check model with us, effectively inviting outside scrutiny into the system’s design and function. With this data, we were able to build a hypothetical welfare recipient to get insight into how an individual applicant would be evaluated by Smart Check.

This model was trained on a data set encompassing 3,400 previous investigations of welfare recipients. The idea was that it would use the outcomes from these investigations, carried out by city employees, to identify which factors in the initial applications were correlated with potential fraud.