
The whale has returned.

After rocking the global AI and business community early this year with the January 20 initial release of its hit open source reasoning AI model R1, Chinese startup DeepSeek, a spinoff of the previously only locally well-known Hong Kong quantitative analysis firm High-Flyer Capital Management, has released DeepSeek-R1-0528, a significant update that brings DeepSeek’s free and open model near parity in reasoning capabilities with proprietary paid models such as OpenAI’s o3 and Google Gemini 2.5 Pro.

This update is designed to deliver stronger performance on complex reasoning tasks in math, science, business and programming, along with enhanced features for developers and researchers.

Like its predecessor, DeepSeek-R1-0528 is available under the permissive and open MIT License, supporting commercial use and allowing developers to customize the model to their needs.

Open-source model weights are available via the AI code sharing community Hugging Face, and detailed documentation is provided for those deploying locally or integrating via the DeepSeek API.

Existing users of the DeepSeek API will automatically have their model inferences updated to R1-0528 at no additional cost. The current cost for DeepSeek’s API is $0.14 per 1 million input tokens during regular hours of 8:30 pm to 12:30 pm (dropping to $0.035 during discount hours). Output is consistently priced at $2.19 per 1 million tokens.
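As a rough illustration of what those rates mean in practice, the sketch below estimates the cost of a workload from the per-million-token prices quoted above. The function name `estimate_cost` and its parameters are hypothetical, and the figures are simply the ones cited in this article, so treat the result as a back-of-the-envelope estimate rather than a billing calculation.

```python
# Prices quoted in the article, in USD per 1 million tokens.
INPUT_PRICE_REGULAR = 0.14
INPUT_PRICE_DISCOUNT = 0.035
OUTPUT_PRICE = 2.19


def estimate_cost(input_tokens: int, output_tokens: int,
                  discount_hours: bool = False) -> float:
    """Estimate the USD cost of a workload from the quoted rates.

    Hypothetical helper: only the input price changes during discount
    hours, matching the article's statement that output pricing is constant.
    """
    input_price = INPUT_PRICE_DISCOUNT if discount_hours else INPUT_PRICE_REGULAR
    return (input_tokens * input_price + output_tokens * OUTPUT_PRICE) / 1_000_000


if __name__ == "__main__":
    # 2 million input tokens and 500,000 output tokens at regular hours.
    print(f"${estimate_cost(2_000_000, 500_000):.3f}")
```

Because output tokens cost far more than input tokens at these rates, the longer reasoning traces of a model like R1-0528 are likely to dominate the bill for most workloads.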

For those looking to run the model locally, DeepSeek has published detailed instructions on its GitHub repository. The company also encourages the community to provide feedback and questions through its service email.

Individual users can try it for free through DeepSeek’s website, though you’ll need to provide a phone number or Google Account access to sign in.

Enhanced reasoning and benchmark performance

At the core of the update are significant improvements in the model’s ability to handle challenging reasoning tasks.

DeepSeek explains in its new model card on Hugging Face that these improvements stem from leveraging increased computational resources and applying algorithmic optimizations in post-training. This approach has resulted in notable improvements across various benchmarks.

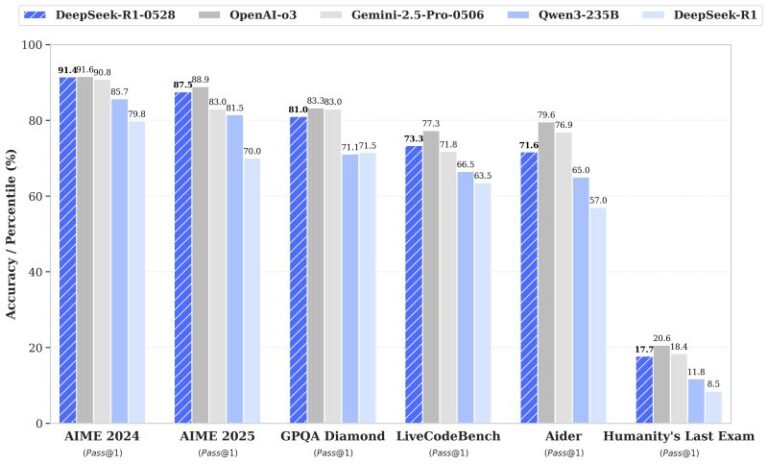

In the AIME 2025 test, for instance, DeepSeek-R1-0528’s accuracy jumped from 70% to 87.5%, indicating deeper reasoning processes that now average 23,000 tokens per question compared to 12,000 in the previous version.

Coding efficiency additionally noticed a lift, with accuracy on the LiveCodeBench dataset rising from 63.5% to 73.3%. On the demanding “Humanity’s Final Examination,” efficiency greater than doubled, reaching 17.7% from 8.5%.

These advances put DeepSeek-R1-0528 closer to the performance of established models like OpenAI’s o3 and Gemini 2.5 Pro, according to internal evaluations. Both of those models have rate limits and/or require paid subscriptions to access.

UX upgrades and new features

Beyond performance improvements, DeepSeek-R1-0528 introduces several new features aimed at enhancing the user experience.

The update adds support for JSON output and function calling, features that should make it easier for developers to integrate the model’s capabilities into their applications and workflows.
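To make those two features concrete, here is a minimal sketch of how an application might use them. It makes no network calls. The request layout follows the widely used OpenAI-style chat-completions convention, which this article does not spell out, so the field names (`response_format`, `tools`), the model identifier `deepseek-reasoner`, and the `get_weather` tool are all assumptions for illustration. Check DeepSeek's API documentation before relying on any of them.

```python
import json


def build_request(question: str) -> dict:
    """Build a chat-completions style request body (nothing is sent here)."""
    return {
        "model": "deepseek-reasoner",  # assumed model identifier
        "messages": [
            {"role": "system", "content": "Reply with a JSON object."},
            {"role": "user", "content": question},
        ],
        # Assumed switch asking the model to emit valid JSON.
        "response_format": {"type": "json_object"},
        # Assumed tool declaration describing a function the model may call.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",  # hypothetical tool
                    "description": "Look up the weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
    }


def get_weather(city: str) -> str:
    """Hypothetical local function that the model can ask the app to run."""
    return f"Sunny in {city}"


def dispatch_tool_call(tool_call: dict) -> str:
    """Run the local function named in a tool call, using its JSON arguments."""
    registry = {"get_weather": get_weather}
    fn = registry[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    return fn(**args)


if __name__ == "__main__":
    # A mocked tool call shaped like the assumed response format.
    mock_call = {"function": {"name": "get_weather",
                              "arguments": '{"city": "Paris"}'}}
    print(dispatch_tool_call(mock_call))
```

The design point is that the model never executes anything itself: it returns the name of a function and JSON-encoded arguments, and the application decides whether and how to run it.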

Front-end capabilities have also been refined, and DeepSeek says these changes will create a smoother, more efficient interaction for users.

Additionally, the model’s hallucination rate has been reduced, contributing to more reliable and consistent output.

One notable update is the introduction of system prompts. Unlike the previous version, which required a special token at the start of the output to activate “thinking” mode, this update removes that need, streamlining deployment for developers.

Smaller variants for those with more limited compute budgets

Alongside this release, DeepSeek has distilled its chain-of-thought reasoning into a smaller variant, DeepSeek-R1-0528-Qwen3-8B, which should help those enterprise decision-makers and developers who don’t have the hardware necessary to run the full model.

This distilled version reportedly achieves state-of-the-art performance among open-source models on tasks such as AIME 2024, outperforming Qwen3-8B by 10% and matching Qwen3-235B-thinking.

According to Modal, running an 8-billion-parameter large language model (LLM) in half-precision (FP16) requires approximately 16 GB of GPU memory, equating to about 2 GB per billion parameters.

Therefore, a single high-end GPU with at least 16 GB of VRAM, such as the NVIDIA RTX 3090 or 4090, is sufficient to run an 8B LLM in FP16 precision. For further quantized models, GPUs with 8–12 GB of VRAM, like the RTX 3060, can be used.
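The rule of thumb behind those numbers is simple arithmetic: parameter count times bytes per parameter. The sketch below works it through. The helper name `weight_memory_gb` is hypothetical, and the 8-bit and 4-bit entries are standard storage widths added for illustration, not figures from Modal or DeepSeek. It counts model weights only, so real usage will be higher once activations and the KV cache are included.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Hypothetical helper: excludes activations, KV cache, and framework
    overhead, so treat the result as a lower bound on VRAM needed.
    """
    # (params_billion * 1e9 params) * bytes / 1e9 bytes-per-GB simplifies to:
    return params_billion * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for precision in ("fp16", "int8", "int4"):
        print(precision, weight_memory_gb(8, precision), "GB")
```

For an 8B model this gives 16 GB at FP16, matching the figure cited above, and 8 GB or 4 GB at 8-bit or 4-bit quantization, which is why cards in the 8–12 GB range become workable.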

DeepSeek believes this distilled model will prove useful for academic research and industrial applications requiring smaller-scale models.

Initial AI developer and influencer reactions

The update has already drawn attention and praise from developers and enthusiasts on social media.

Haider aka “@slow_developer” shared on X that DeepSeek-R1-0528 “is simply unbelievable at coding,” describing how it generated clean code and working tests for a word scoring system challenge, both of which ran perfectly on the first try. According to him, only o3 had previously managed to match that performance.

Meanwhile, Lisan al Gaib posted that “DeepSeek is aiming for the king: o3 and Gemini 2.5 Pro,” reflecting the consensus that the new update brings DeepSeek’s model closer to these top performers.

Another AI news and rumor influencer, Chubby, commented that “DeepSeek was cooking!” and highlighted how the new version is nearly on par with o3 and Gemini 2.5 Pro.

Chubby even speculated that the latest R1 update might indicate that DeepSeek is preparing to release its long-awaited and presumed “R2” frontier model soon, as well.

Looking ahead

The release of DeepSeek-R1-0528 underscores DeepSeek’s commitment to delivering high-performing, open-source models that prioritize reasoning and usability. By combining measurable benchmark gains with practical features and a permissive open-source license, DeepSeek-R1-0528 is positioned as a valuable tool for developers, researchers, and enthusiasts looking to harness the latest in language model capabilities.
